Docker Swarm: Falls Short Of Perfection

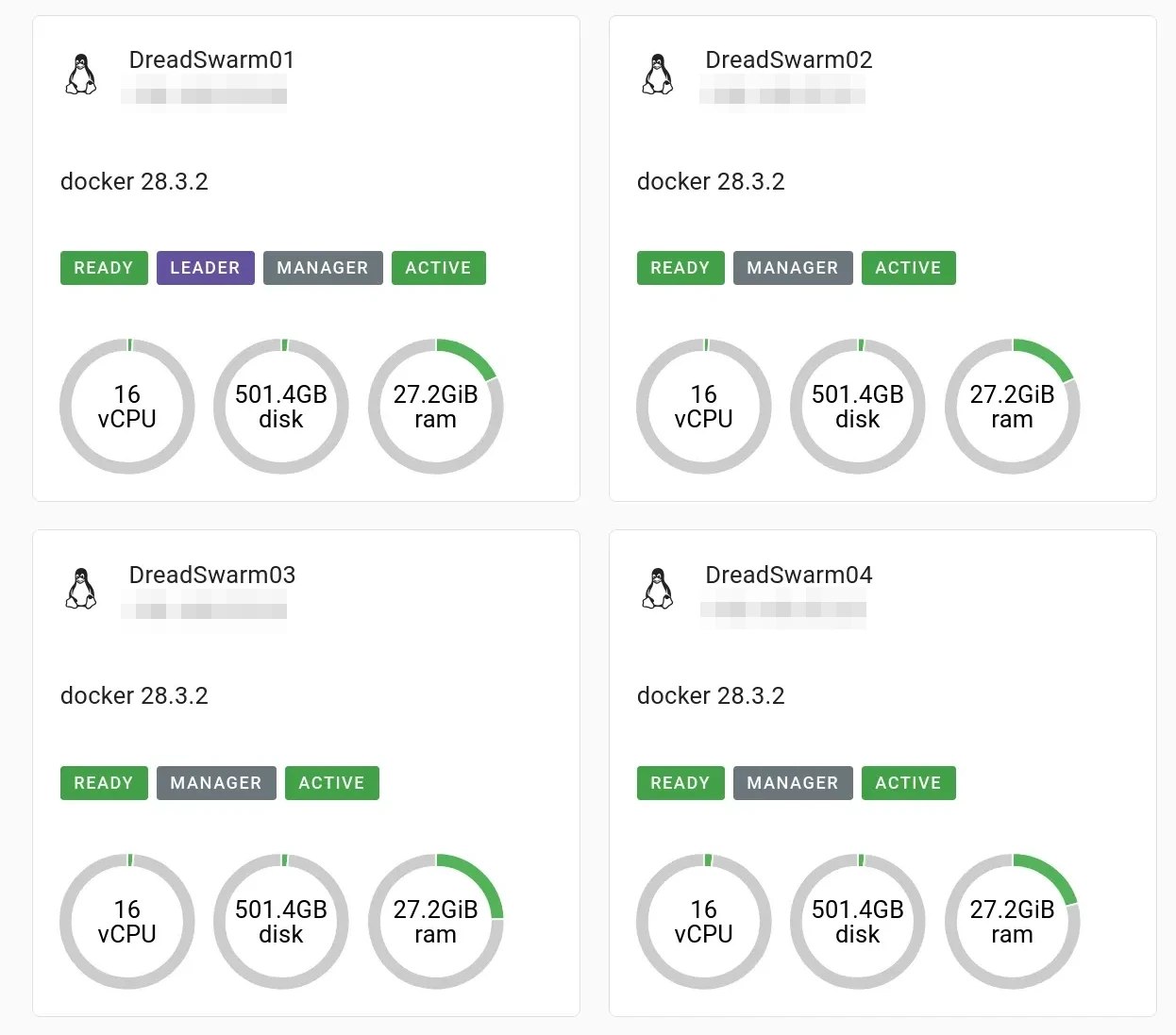

While I just wrote a glowing review of Docker Swarm, the truth is it's not nearly capable enough to be a total solution. Unlike the last blog post, this one gets a little more technical so I can properly explain a specific shortcoming with Docker Swarm. You need a ton of external tools just to get Swarm to the point where it's usable. For example, out of the box, there's no replicated storage. You either need shared network storage for persistent data, or you need something like Ceph to replicate storage across the nodes. You also need a networking solution that gives all your nodes a single IP they can all answer on, so your network services stay Highly Available. While that's a pain, those are problems we can solve easily with MicroCeph and KeepAlived.
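To make the shared storage option concrete, here's a minimal sketch of a named volume backed by an NFS export, so a container sees the same data no matter which node it lands on. The server address, export path, and mount point are all placeholders I made up for illustration, and it assumes you already have an NFS server reachable from every node.

```yaml
services:
  web:
    image: ghcr.io/karakeep-app/karakeep:${KARAKEEP_VERSION:-release}
    volumes:
      # Mount point inside the container (assumed here, check your image's docs)
      - karakeep_data:/data

volumes:
  karakeep_data:
    # The built-in "local" driver can mount NFS when given these options
    driver: local
    driver_opts:
      type: nfs
      # 192.0.2.10 is a placeholder address for a hypothetical NFS server
      o: "addr=192.0.2.10,rw,nfsvers=4"
      # Placeholder export path on that server
      device: ":/srv/nfs/karakeep"
```

Each node mounts the export itself when a task using the volume starts there, so the NFS server becomes the thing you have to keep alive.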

What we can't solve easily is what I find the most annoying part of Docker Swarm: customizing where and how containers are placed across the Swarm. This isn't a huge issue, but it's something that drives me nuts. Let me explain.

One scenario that I often run into: web servers. They're typically a stack, consisting of a web server and a database. I run lots of them, and I've deployed many of them across my Docker Swarm. However, I quickly found that Docker Swarm's scheduler spreads the services in a stack across the cluster, so in practice each component lands on a different node. For example, the web server ends up running on a different server than its database.

What this means is that the services in a stack end up divided between different machines. Is this a problem? That's a good question, and I'm not entirely sure. I would imagine that making every stack talk to another node over the network adds overhead, and with it, latency. Whether that latency actually matters, I can't say. To be safe though, I wanted to force all the containers in a stack to run on the same node at any given time.

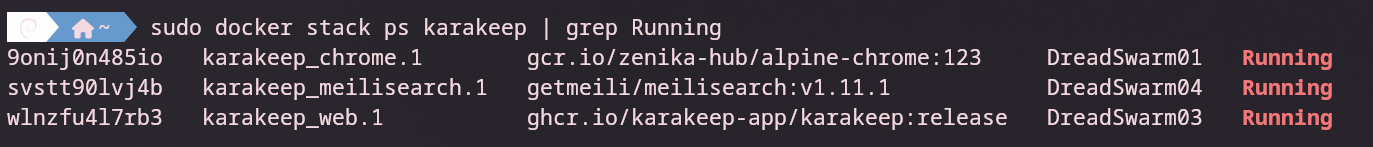

Hold up, that's not possible. At least, not in a way that retains High Availability. Technically, you can force an entire stack to run on a single node, but if that node goes down, the stack will not migrate to another node. That's no good. I want the whole stack to stay on one node unless that node goes down, and only then come up on another node. Sorry, Docker Swarm doesn't have your back there. That sort of design doesn't align with Docker Swarm's deployment philosophy. If you want High Availability, your stack gets spread across the swarm, and you're going to have to accept any performance loss that may come with it.

Below is a cut-down compose.yml for karakeep, a stack with 3 different services: Web, Chrome, and Meilisearch.

    services:
      web:
        image: ghcr.io/karakeep-app/karakeep:${KARAKEEP_VERSION:-release}
        networks:
          - net
        deploy:
          mode: replicated
          replicas: 1
      chrome:
        image: gcr.io/zenika-hub/alpine-chrome:123
        networks:
          - net
        deploy:
          mode: replicated
          replicas: 1
      meilisearch:
        image: getmeili/meilisearch:v1.11.1
        networks:
          - net
        deploy:
          mode: replicated
          replicas: 1

    networks:
      net:
        driver: overlay
        attachable: true

By default, Swarm's spread scheduling will typically split these 3 services across 3 nodes, forcing them to communicate across the network. To me, that's inefficient. They're all minor services which should just run on the same box. In my opinion, Docker developers should let me tie the entire stack to one placement configuration, or perhaps offer a service group that lets me define parameters for the whole group. Then I could have High Availability and efficient grouping of containers at the same time. Alas, the orchestration potential of Docker Swarm is quite limited.
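For reference, this is roughly what the "force it all onto one node" option mentioned earlier looks like. It's a minimal sketch using a placement constraint on each service, and `node1` is a made-up hostname standing in for whichever node you'd pick.

```yaml
services:
  web:
    image: ghcr.io/karakeep-app/karakeep:${KARAKEEP_VERSION:-release}
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints:
          # "node1" is a hypothetical hostname; every service gets the same rule
          - node.hostname == node1
  chrome:
    image: gcr.io/zenika-hub/alpine-chrome:123
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints:
          - node.hostname == node1
  meilisearch:
    image: getmeili/meilisearch:v1.11.1
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints:
          - node.hostname == node1
```

This keeps all three containers together, but it's exactly the trade-off I don't want: if `node1` goes down, no other node satisfies the constraint, so the tasks sit pending instead of failing over.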

I asked my AI assistant for help. Sadly, the only viable solution is to write a script that handles placement for you. That's DIY orchestration. At that point, I may as well bite the bullet and learn a robust solution like Kubernetes.
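To give a sense of what that DIY route could involve, here's one possible shape for the compose side of it. This is my own sketch, not necessarily what the assistant suggested: the services are constrained to a node label (the label name `karakeep_home` is invented for this example), and an external script would be responsible for moving that label to a healthy node when the current one dies.

```yaml
services:
  web:
    image: ghcr.io/karakeep-app/karakeep:${KARAKEEP_VERSION:-release}
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints:
          # "karakeep_home" is a hypothetical label; only one node should carry it
          - node.labels.karakeep_home == true
  # chrome and meilisearch would carry the same constraint

# The label is managed outside the compose file, for example:
#   docker node update --label-add karakeep_home=true <node>
#   docker node update --label-rm karakeep_home <node>
# A watchdog script (not shown) would have to run these when a node fails.
```

The catch is that the script is now the orchestrator. If it misbehaves, or labels two nodes at once, you're back to a split stack or no stack at all.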

Where does that leave us? Sadly, with simply accepting this inefficiency. So long as I don't notice a performance impact and we continue to have High Availability, there won't be an issue. Still, I can't help but wonder how hard it would truly be to run Kubernetes. Only time will tell when I'll be forced to make the switch, especially since it's rumored that Docker themselves are barely supporting Swarm Mode.